K-Nearest Neighbors Classifier

Published on 06/25/20

2 min read

In category

Data_Science

Using A KNN Classifier

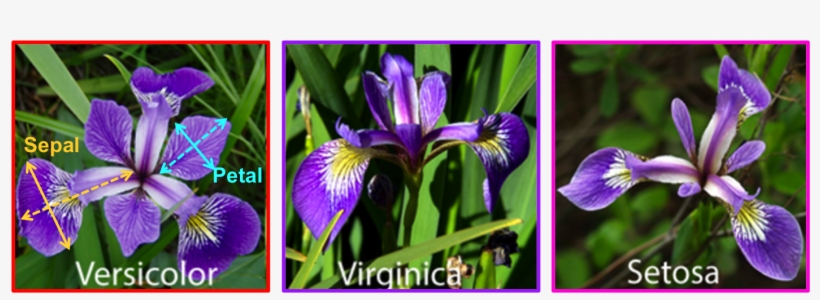

K-nearest neighbor classifier is considered a supervised machine learning algorithm. It is used to solve classification or regression challenges in a data analysis project. One example of a classification challenge is demonstrated in the “iris flower” dataset. The dataset contains data records of different iris plants and their physical measurements as well as the class or type of iris flower it belongs to. This dataset can be used to train a KNN algorithm that can classify the type of a new flower based on its physical properties.

What is the algorithm doing?

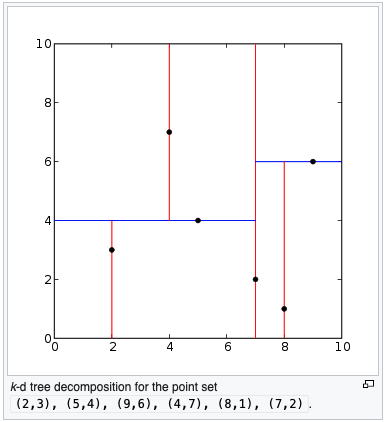

The K-nearest neighbors (KNN for short) algorithm expects a “K” parameter and its input training data to be vector representations of the data features being analyzed. The “K” refers to the number of neighbors to group by when training and storing the input data. The distance between the data inputs are measured, using different metrics such as Euclidean distance, and stores them into memory.

Implementing A Simple KNN in python

Dependencies:

- KD tree data structure from scipy

- Counter from python collection

Description:

KNN algorithm as a python class

Takes k parameter in class instantiation

- Fit method

- load X and y inputs into class variables

- pass X into the KD tree as class variable

- Predict method

- For each predict input query and save nearest_neighbors to class variable

- Create a list of labels from the nearest_neighbors

- Return the result of majority vote method on nearest_neighbors

- Majority vote method

- Use counter to count all labels of nearest_neighbors

- Use the most_common method

- repeat counting for all labels

- kneighbors method

- Does predict function and returns the nearest_neighbor class variable